Exams and online courses: why proctoring is the wrong issue

Many instructors struggle with assessment of any kind, but particularly with online assessment if they have never taught online before. What they try to...

Third edition of Teaching in a Digital Age is now published

The third edition of Teaching in a Digital Age is now available here: https://pressbooks.bccampus.ca/teachinginadigitalagev3m/

I have exported in several formats and made it public....

How to address the skills agenda in higher education: a German perspective

Ehlers, U-D. (2020) Future Skills: the future of learning and higher education. Karlsruhe, Germany: Herstellung und Verlag, pp. 311

What is this book about?

This free,...

What is the difference between competencies, skills and learning outcomes – and does it...

Bumping into neighbours outside a branch public library can sometimes stimulate a lot of thought. I ran into a fellow consultant (our neighbourhood is...

From access to digitalisation: the changing role of online learning

I was away last week in Denmark, helping with the development of a new business degree at Aalborg University. I was there to do...

Using learning analytics to predict mastery: a flawed concept?

I'm slogging my way through the special issue on learning analytics in Distance Education, a subscription-only journal, because what is happening in this field...

Second edition of Teaching in a Digital Age now published

The second edition of Teaching in a Digital Age is now available at https://pressbooks.bccampus.ca/teachinginadigitalagev2/

The first edition was published in 2015. The second edition is...

2018 review: 21st century knowledge and skills

How to develop the knowledge and skills that learners will need in the 21st century was a growing topic of discussion not just in...

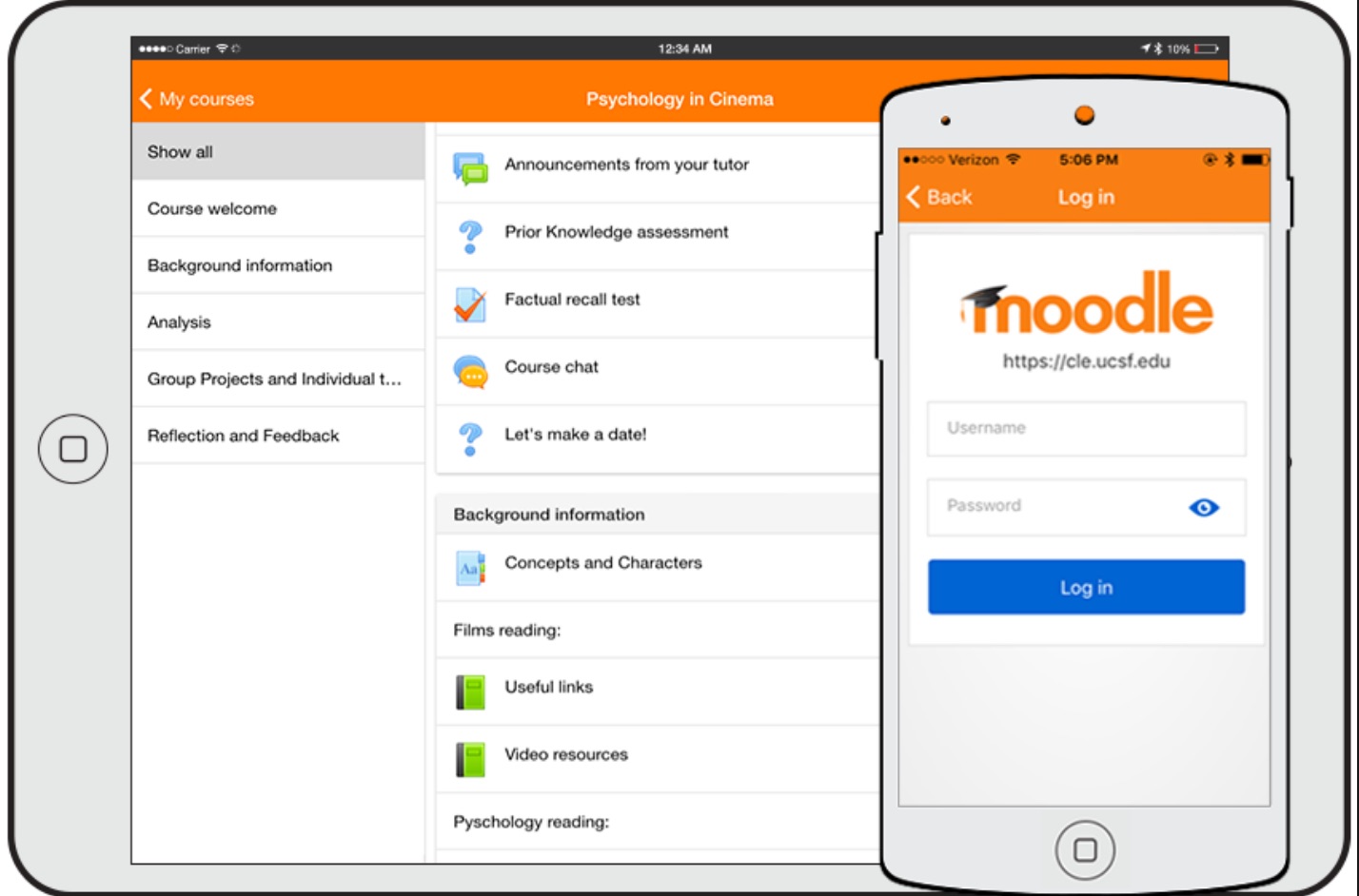

Why is innovation in teaching in higher education so difficult? 3. Learning management systems

Reasons for using a Learning Management System

I pointed out in my previous post that the LMS is a legacy system that can inhibit innovation...

How serious should we be about serious games in online learning?

In the 2017 national survey of online learning in post-secondary education, and indeed in the Pockets of Innovation project, serious games were hardly mentioned...