Third edition of Teaching in a Digital Age is now published

The third edition of Teaching in a Digital Age is now available from here: https://pressbooks.bccampus.ca/teachinginadigitalagev3m/

I have exported in several formats and made it public....

Book Review: Reimagining Digital Learning for Sustainable Development

Jagannathan, S. (ed.) (2021) Reimagining Digital Learning for Sustainable Development: How Upskilling, Data Analytics and Educational Technologies Close the Skills Gap Routledge: New York/London,...

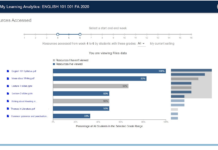

Let students see their course data: a learning analytics project from the University of...

Love, J., DeMonner, S., and Teasley, S. (2021) Show Students Their Data: Using Dashboards to Support Self-Regulated Learning Educause Review, July 20

Learning analytics (LA)...

Recordings of Contact North/ATD’s Learning and Technology Conference now available

The conference

I'm still catching up on all the posts I meant to write during 2020 but didn't have time for.

Contact North and...

Five webinar recordings on the myths and realities of online learning now publicly available

About the webinars

Contact North has now made publicly available recordings of the five webinars on the Myths, Realities, Opportunities and Challenges of Online Learning...

Two webinars on the future of online learning next week

I am offering two quite different webinars next week on the future of online learning.

Contact North

This will be the last in my series of...

Online learning in 2020: perfect vision in the Year of the Rat

No, no firm predictions from me for 2020 other than more of the same from 2019, plus something unexpected. However, I will suggest three...

Learning analytics – or learner surveillance?

Crosslin, M. (2019) Is Learning Analytics Synonymous with Learning Surveillance, or Something Completely Different? EduGeek Journal, October 30

This is an excellent, thoughtful article about...

Learning analytics in online learning: trying hard but need to do better

I have now covered all five main articles in the special August issue of the journal Distance Education on learning analytics (because it is...

MOOCs and student data privacy: a sentiment analysis

Are you still with me as I plough through the special issue on learning analytics from the journal Distance Education? I hope so. I...