AI Teaching Assistant Pro

I am in the process of evaluating a suite of AI tools from Contact North, Ontario, for students, instructors and administrators.

Contact North offers a suite of six AI tools for teachers/instructors. In four previous posts, I evaluated

- a tool for generating multiple choice questions and answers.

- a tool for generating essay questions and a rubric for answers

- a tool for building a course syllabus

- a tool for building slides

- a tool for creating short, talking head videos (Learning Shorts)

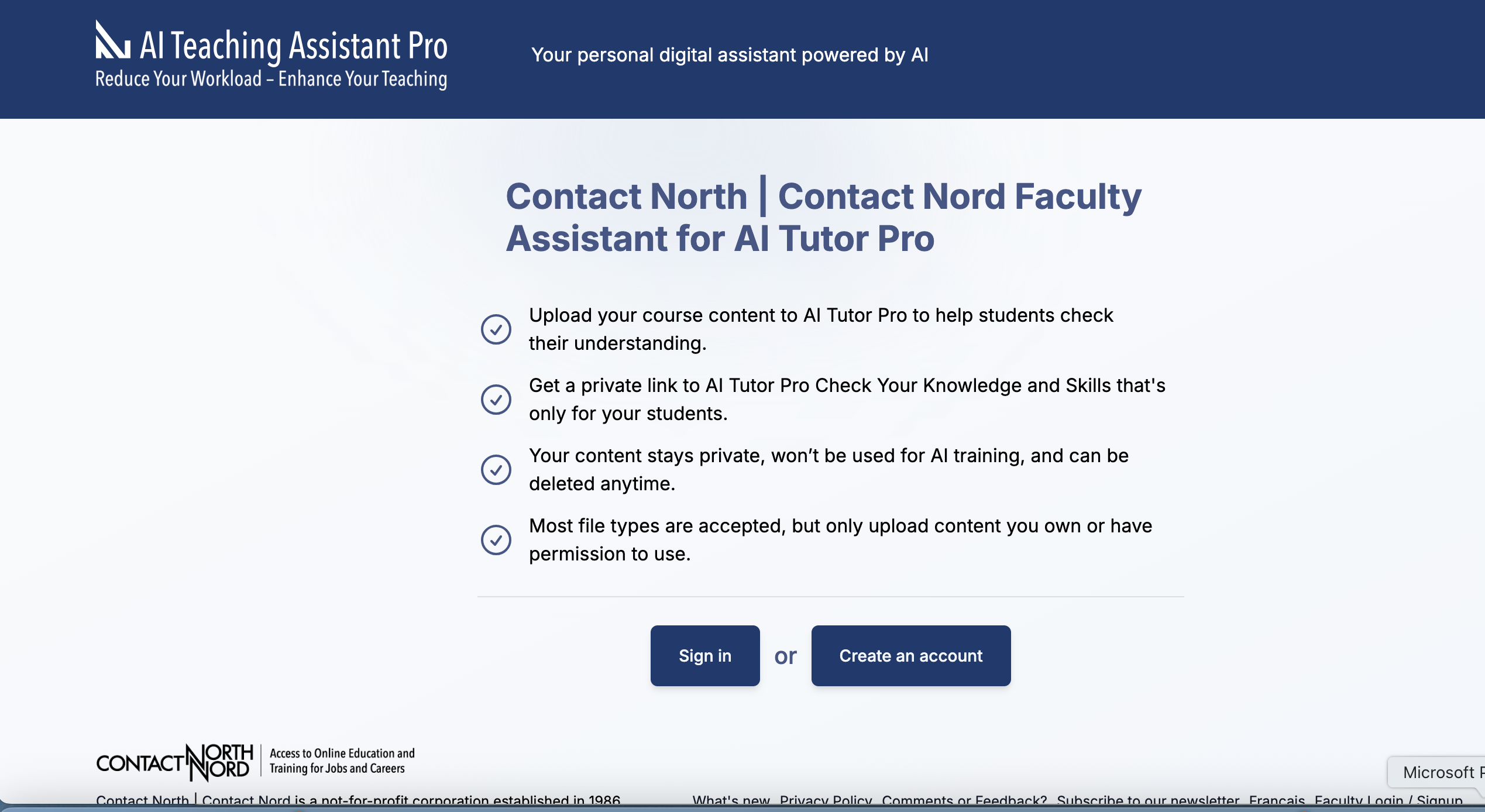

In this post, I will examine another tool for teachers and instructors, a Faculty Assistant.

What does Faculty Assistant do?

I’ve always wanted an assistant – someone to get me coffee, answer administrative phone calls, make travel arrangements for me, proofread my research papers and deal with editors’ queries, tell my boss I’m out on an important mission (when I’m on the golf course), and so on. So who wouldn’t? With this in mind, let’s have a look at Contact North’s AI Faculty Assistant, which can be found under Contact North’s AI Teaching Assistant Pro.

So what does it do? Basically, this tool asks the instructor to upload a document and then an AI bot quizzes students about the contents of the document. Instructors are allowed to upload a maximum of 10 files (there is a limit on the size of each file – an article or a paper is fine, a whole book is not). The tool stores the document(s), then students are directed to Faculty Assistant. Once a student signs in, Faculty Assistant will give the student links related to the stored document(s). Clicking a link starts a bot that asks questions about the content of the file and provides feedback to the student on their responses. It does not, however, grade or assess them. In other words, it’s like a homework reviewer for students.

What I did

I uploaded two documents:

- the first was Section 10 of Chapter 10 on ‘Deciding on which media to use’ from my book ‘Teaching in a Digital Age.’ The previous sections in this chapter had suggested a set of criteria called the SECTIONS model as factors to consider when deciding on media for teaching. Section 10 however focuses on how to use the model and in particular compares a deductive reasoning approach with an inductive reasoning approach, and recommends the latter.

- the course outline, outcomes and notes for the Count of Monte Cristo that Teacher Pro had previously generated for me through another Teaching Assistant Pro tool, Syllabus Builder.

I then played the role of a student, answering the questions the bot asked about each document.

1. Target group (Scale 0-5)

Is it clear who should make use of these tools and for what purpose?

Once again, yes and no. I think the intent is for the bot to play the role of a Socratic tutor, so that the instructor does not have to do this. However, this depends on the students being willing to be tutored by a bot. The big question is: is this an adequate substitution?

I give this a score of 3 out of 5 on this criterion.

2. Ease of use (Scale: 0-10)

- Is it easy to find/log in? To some extent. If you are a first-time user, you will be asked to set up an account with an email address. This process, although simple and quick, may take an hour or so before you are finally registered, but once registered you are good for all the tools. However, instructors will need to send their students instructions for using the bot, which students reach by clicking on a URL.

- Does it provide the necessary information quickly? Once the document was uploaded by the instructor, the bot quickly provided questions and responded with feedback to students’ answers.

- Is it easy to make use of the questions and answers it provides? Students can copy and transfer each session to a Word or similar document for later use, but there is no instruction for students on how to do this. They will need to work this out for themselves.

I struggled a little with this tool, not because of the interface or usability, but because I wasn’t sure at the outset what the tool was supposed to do. However, I think both instructors and students will manage to work out what to do with a little practice. (Whether they will want to do this is another question). Also, as with all bots of this kind, it wasn’t clear to me when to stop. It just keeps going until you are worn out or bored.

I give ease of use a score of 6 out of 10, mainly because its purpose is not immediately evident.

3. Validity and comprehensiveness of information provided (Scale: 0-10)

- How valid and comprehensive was the information provided, given the topic? Once again, the coverage of content was extensive. However, I suspect that the bot does not limit its responses just to the content of the uploaded document, but searches more widely on the internet. In the case of the section from my book, it did not confine itself to the decision-making process in Section 10 but also drew on other sections of the chapter that I had not uploaded. Also, it said I was wrong when, in response to the question ‘What is the difference between deductive reasoning and inductive reasoning in the context of selecting media for education?’, I answered that the inductive method is better when choosing media, which was the whole point of this section. Instead, it merely repeated the differences in definition. AI is not good at nuances, which are sometimes critical to understanding.

- Does it provide relevant follow-up questions or activities for instructors or students? It does the Q and A well, but does not offer alternative sources or activities.

I am giving this 7 out of 10, mainly for the comprehensiveness of the responses. It lost points on precision.

4. Likely learning outcomes (Scale: 0-10)

- provides accurate/essential assessment on the topic/question (0-3 points) Assessment is not linked to this tool, although feedback is given. The separate tools for multiple choice or essay questions could be used alongside it, but this will need some preparation and input from the instructor. Score: 1 out of 3.

- students will learn key concepts or principles within the study area/topic (0-3 points) Maybe. The issue here is motivation. Will the students want to work through this process? I got bored quickly but students might not. Score: 2 out of 3

- enables/supports critical thinking about the topic (with: max 5 points) or without (max 3 points) good feedback. No, the emphasis is mainly on comprehension and memory. Score: 1 out of 3

- motivates the instructor/student (1 point). If an instructor believes that students need a homework monitor, or wants to offload their interaction with students, then they will want to use this tool. However, if they believe direct interaction with students is an essential part of their work, they will not like this tool.

Total score: 5 out of 10

5. Transparency (Scale: 0-5)

Where do the topics come from? Who says? Does it provide references, facts or sources to justify the choice of topics? What confidence can I have in the information provided?

Since the instructor provides the source for the interaction between bot and student, in the form of an uploaded document, it should be transparent, but I am not convinced that the bot draws solely on the uploaded document. I give this 4 out of 5 for transparency.

6. Ethics and privacy (Scale: 0-5)

I’ll be blunt. I don’t think this is the kind of activity that an instructor should delegate to a bot, although I can see it could be useful as an alternative or addition to instructor-student interaction, and for students who have a lousy instructor, this could be a very useful alternative. (But then, would a lousy instructor bother to set this up for students?)

This tool requires both students and instructors to register. Contact North has a clear policy on the ‘home’ web site about student/tutor data and privacy.

I give this a score of 2 out of 5.

7. Overall satisfaction (Scale: 0-10)

Well, this tool did not provide me with the kind of assistance I was hoping for at the beginning of this review, but that is not a valid criterion. Does it do what it sets out to do? Yes, it does. It provides an automated Q and A session on any topic (or rather document) of the instructor’s choice.

However, I have to question whether this is the right thing to do by an instructor. At least in my area of expertise, interaction with students is the most important thing I do. In terms of my expertise, interaction is often necessary to help students with critical thinking, feedback that takes account of their particular needs, and ensuring a sense of belonging to a community of learners, both through group and individual interaction. In particular I can help in areas that are nuanced or where there are conflicting approaches or viewpoints or where students may have strong feelings.

At the same time, if this is not how you see your job as an instructor, and if you want to ensure that students have plenty of work to do on the content of a course so that they have a clear understanding of the content, then this will be a useful assistant.

Once again, this tool focuses mainly on the content to be covered. Instructors will still need to do the work of ‘opening up’ the content, providing suitable learning activities, and setting appropriate assessment tasks.

I am giving this an overall 4 out of 10 in terms of satisfaction.

Overall evaluation

I give this a total score of 31 out of 55 – roughly 56%. This is a useful tool for giving students busy work and providing an alternative source of activity to in-person instructor-student interaction. For an instructor with a very large class, this tool could be particularly useful.
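For anyone who wants to check the arithmetic, the total can be tallied from the seven section scores. The following is only a minimal sketch in Python; the scores and maximums are those stated in each section above, while the dictionary and variable names are my own illustrative choices.

```python
# Scores as stated in each section of this review: (awarded, maximum).
scores = {
    "Target group": (3, 5),
    "Ease of use": (6, 10),
    "Validity and comprehensiveness": (7, 10),
    "Likely learning outcomes": (5, 10),
    "Transparency": (4, 5),
    "Ethics and privacy": (2, 5),
    "Overall satisfaction": (4, 10),
}

# Add up the awarded points and the available points separately.
total = sum(awarded for awarded, _ in scores.values())
maximum = sum(limit for _, limit in scores.values())

# Express the result as a whole-number percentage.
percentage = round(100 * total / maximum)

print(f"{total} out of {maximum} ({percentage}%)")
```

Note that the criteria are not equally weighted: four are scored out of 10 and three out of 5, so the 10-point criteria count for twice as much in the total.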

My concern though is whether students will find this a satisfying experience. It is the bot asking the questions, not the students. As a student, will it, like the bot on my telephone company’s customer service web site, ignore MY issues? Also, I question the attempt to automate the most important human side of teaching, engaging with students around the core elements of a subject discipline. This may be acceptable in the quantitative subject disciplines, but this tool will not result in innovative, critical thinking, problem-solving learners. But who needs those these days?

Over to you

Have you used this tool? How useful was it to you? What are the drawbacks? Please use the comment box at the end of this blog post.

Up next

I will be assessing the tool AI Pathfinder Pro within the next seven days.

Dr. Tony Bates is the author of eleven books in the field of online learning and distance education. He has provided consulting services specializing in training in the planning and management of online learning and distance education, working with over 40 organizations in 25 countries. Tony is a Research Associate with Contact North | Contact Nord, Ontario’s Distance Education & Training Network.
