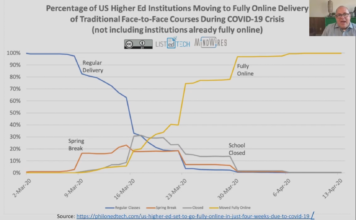

Two webinars on the future of online learning next week

I am offering two quite different webinars next week on the future of online learning.

Contact North

This will be the last in my series of...

Online learning in 2020: perfect vision in the Year of the Rat

No, no firm predictions from me for 2020 other than more of the same from 2019, plus something unexpected. However, I will suggest three...

Using learning analytics to predict mastery: a flawed concept?

I'm slogging my way through the special issue on learning analytics in Distance Education, a subscription-only journal, because what is happening in this field...

Chapter 8.7c Artificial intelligence

I am in the process of finalising a second edition of Teaching in a Digital Age. (For more on this, see Working on the second...

Athabasca University moves to the cloud

I'm struggling to understand how artificial intelligence is going to impact on post-secondary education, and in particular on online learning, at least over the...

A discussion on the risks and benefits to education of AI and automation

I was interviewed recently by Cecília Tomás, a Ph.D. student at Universidade Aberta, the Portuguese Open University, for her thesis on the Internet of...

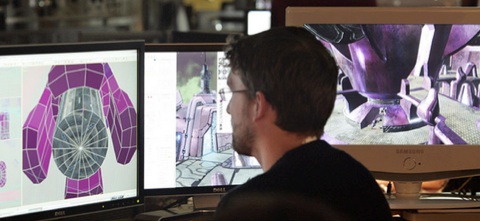

How serious should we be about serious games in online learning?

In the 2017 national survey of online learning in post-secondary education, and indeed in the Pockets of Innovation project, serious games were hardly mentioned...

Assessing the dangers of AI applications in education

Lynch, J. (2017) How AI will destroy education, buZZrobot, 13 November

I'm a bit slow catching up on this (I have a large backlog of...

Scary tales of online learning and educational technology

The Educational Technology Users Group (ETUG) of British Columbia held an appropriately Halloween-themed get-together today called 'The Little Workshop of Horrors', at which participants...

Online learning for beginners: 5. When should I use online learning?

This is the fifth of a series of a dozen blog posts aimed at those new to online learning or thinking of possibly doing...