A personal history: 9. The Northern Ireland Troubles and bun hurling at Lakehead University

I am writing an autobiography, mainly for my family, but it does cover some key moments in the development of open and online learning....

A personal history: 5. India and educational satellite TV

I am writing an autobiography, mainly for my family, but it does cover some key moments in the development of open and online learning....

A personal history of open learning and educational technology: 1. The start of the...

I am writing an autobiography, mainly for my family, but it does cover some key moments in the development of open and online learning....

Book review: Research, Writing and Creative Process in Open and Distance Education

Conrad, D. (ed.) (2023) Research, Writing and Creative Process in Open and Distance Education: Tales from the Field. Cambridge UK: Open Book Publishers

Overview

This is...

Back to school: some things to look for

As faculty and instructors return to campus, here are some publications that may be of interest, if you can find the time during the...

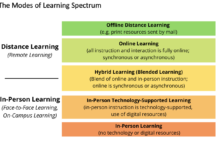

Faculty preferences for mode of delivery following the Covid-19 pandemic

Tyton Partners (2023) Time for class: bridging student and faculty perspectives on digital learning. Boston MA: Tyton Partners

Muscanell, N. (2023) 2023 Faculty and Technology Report: A...

French translation of the third edition of Teaching in a Digital Age now available

I hope you are having a wonderful summer break. If so, all the more reason to read 'Teaching in a Digital Age'.

This is now...

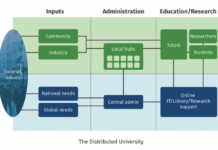

Book Review: Richard Heller’s ‘The Distributed University for Sustainable Higher Education’

Heller, R. (2022) The Distributed University for Sustainable Higher Education. SpringerBriefs in Education. Singapore: Springer

Good news: this is an open access book. The second good...

Overall reflection on Part IV of the Handbook of ODDE

Overall reflection on Part IV

The reviews of individual chapters in Part IV of the Handbook of ODDE, Chapters 28 to 39, have been posted...

Organization, Leadership and Change in Open, Distance and Digital Education: book review, Part 2

Zawacki-Richter, O. and Jung, I. (eds.) (2023) Handbook of Open, Distance and Digital Education. Singapore: Springer

Review of 'Organization, leadership and change': Part 2

You may remember I have...