The perversion of the Internet: scraping and selling children’s data from ed tech tools

Human Rights Watch (2022) How Dare They Peep into My Private Life? New York: Human Rights Watch, May 25

The promise and the ideal

I remember...

A Review of Online Learning in 2021

2021: still bad but better

In my review for 2020, I wrote 'Goodbye - and don't come back', a reaction to the horrible year caused...

What happens to online students when the Internet goes down?

Holzweiss, P. and Walker, D. (2021) The Impact of a Regional Crisis on Online Students and Faculty Online Journal of Distance Learning Administration, Vol....

Bridging the Digital Divide: 3: The Fundamentals of Inequality

Goldrick-Rab, S. et al. (2020) #RealCollege During the Pandemic The Hope Center for College, Community, and Justice Philadelphia: Temple University, June, pp. 22

This is the third post...

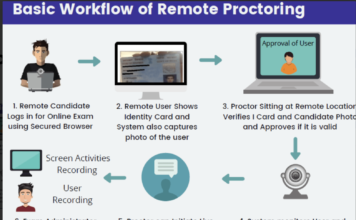

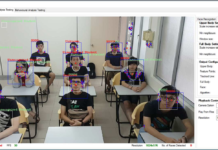

Learning analytics – or learner surveillance?

Crosslin, M. (2019) Is Learning Analytics Synonymous with Learning Surveillance, or Something Completely Different? EduGeek Journal, October 30

This is an excellent, thoughtful article about...

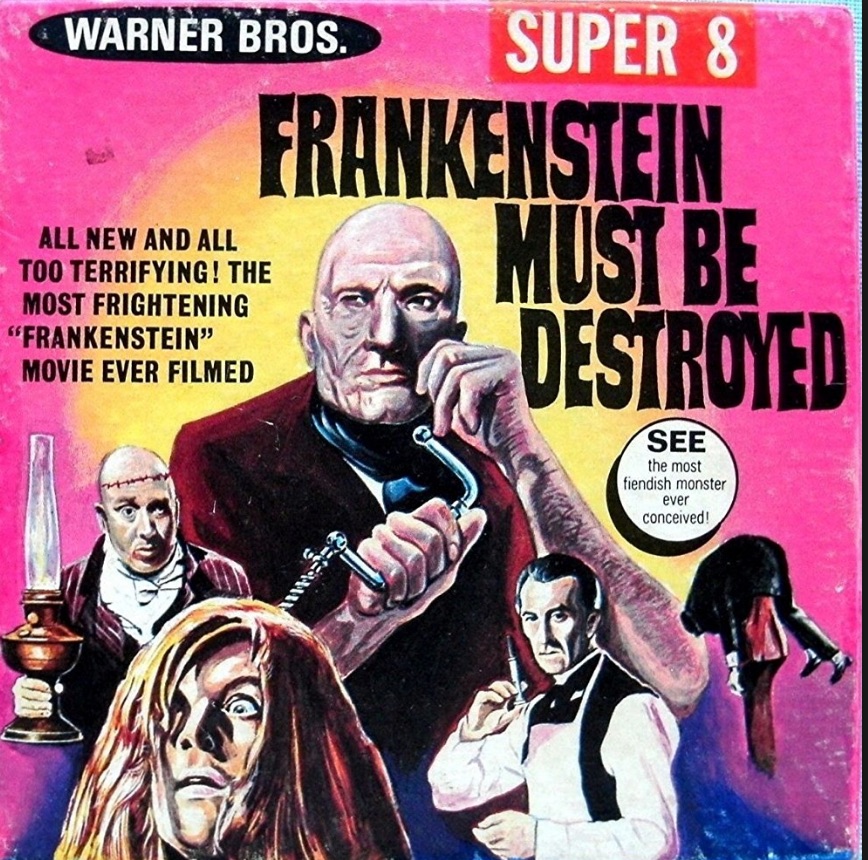

Zuckerberg’s Frankenstein

Prosecutor: Dr. Frankenberg, are you aware that there is a monster roaming the countryside, stealing all the villagers' personal information?

Dr. Frankenberg: Yes, sir, I...

Our responsibility in protecting institutional, student and personal data in online learning

WCET (2018) Data Protection and Privacy Boulder, CO: WCET

United States Attorney's Office (2018) Nine Iranians Charged With Conducting Massive Cyber Theft Campaign On Behalf Of...

Developing a next generation online learning assessment system

Universitat Oberta de Catalunya (2016) An Adaptive Trust-based e-assessment system for learning (@TeSLA) Barcelona: UOC

This paper describes a large, collaborative European Commission project headed by...

Appropriate interventions following the application of learning analytics

SAIDE (2015) Siyaphumelela Inaugural Conference, May 14-15, 2015 SAIDE Newsletter, Vol. 21, No. 3

Reading sources in the right order can avoid you having to eat...

Privacy and the use of learning analytics

Warrell, H. (2015) Students under surveillance Financial Times, July 24

Applications of learning analytics

This is a thoughtful article in the Financial Times about the pros...