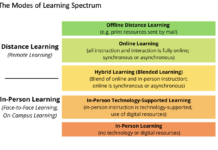

Discussing the strengths and weaknesses of synchronous or asynchronous teaching

The context

I am continuing the process of updating the third version of my open textbook, Teaching in a Digital Age, to take account of...

Defining quality and online learning

The issue

Institutions are struggling to define online or digital learning for their students. In particular, students need to know whether a course requires attendance...

Investigating Indigenous learners’ experience of online learning

Davey, R.C.E. (2019) "It will never be my first choice to do an online course": Examining Experiences of Indigenous Learners Online in Canadian Post-Secondary...

A Review of Online Learning in 2021

2021: still bad but better

In my review for 2020, I wrote 'Goodbye - and don't come back', a reaction to the horrible year caused...

Curriculum design and the teaching of 21st century skills

Rayner, P.J. (2021) Curriculum and program renewal: an introduction Edubytes, November

Chadha, D. (2006) A curriculum model for transferable skills development Engineering Education, Vol.1, No....

Book Review: Reimagining Digital Learning for Sustainable Development

Jagannathan, S. (ed.) (2021) Reimagining Digital Learning for Sustainable Development: How Upskilling, Data Analytics and Educational Technologies Close the Skills Gap Routledge: New York/London,...

12 guiding principles for teaching with technology – still relevant?

Bates, A. (1995) Technology, Open Learning and Distance Education Routledge: New York/London

How much has changed in educational technology over the last 30 years?

In my...

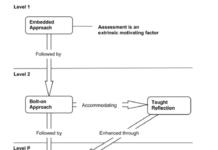

Do we need a theory for blended learning?

The value of a good theory

The psychologist Kurt Lewin said there is nothing more practical than a good theory. When faced with conflicting facts...

Research showing that virtual learning is less effective than classroom teaching – right?

Cellini, R. S. (2021) How does virtual learning impact students in higher education? Brown Center Chalkboard, Brookings, August 13

Summary of results

This blog post is...

Working on a third edition of ‘Teaching in a Digital Age.’

I've now decided to start on a third edition of 'Teaching in a Digital Age.' I began work on the first edition in 2014...